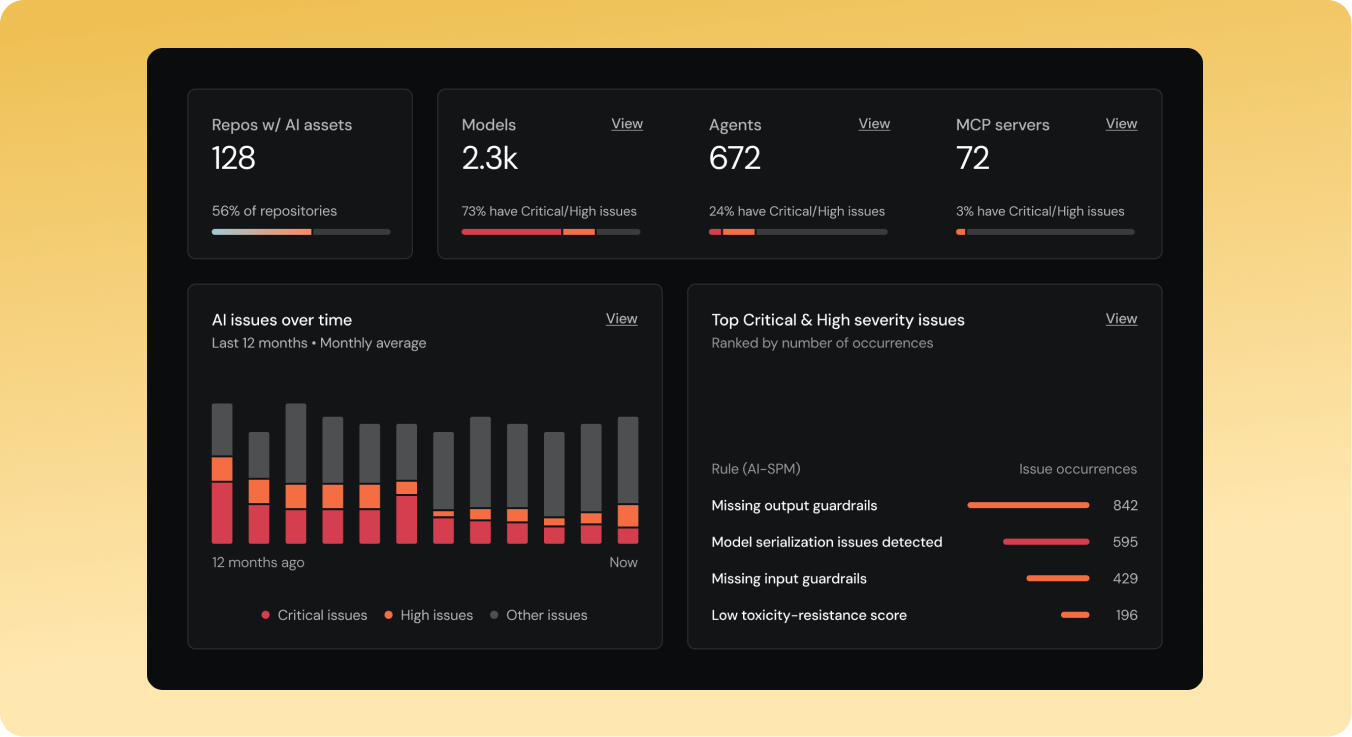

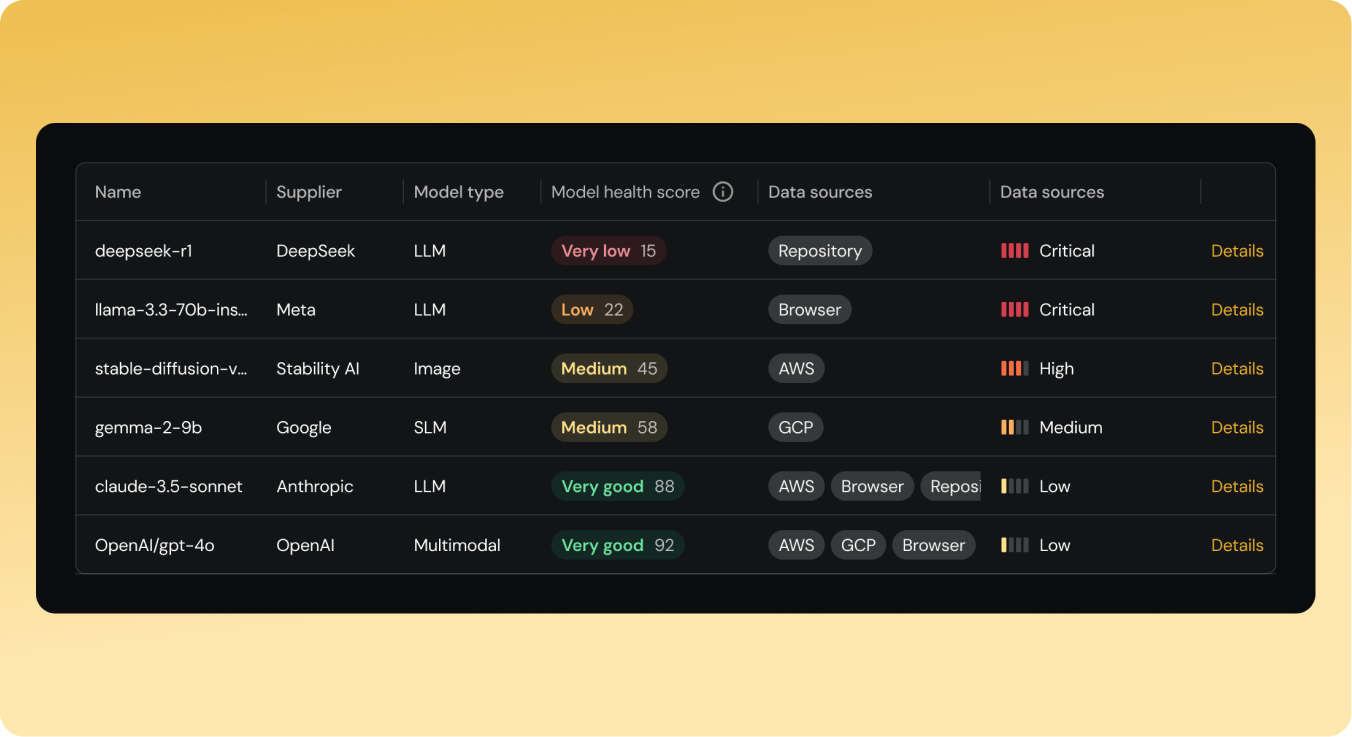

Inventory & discovery

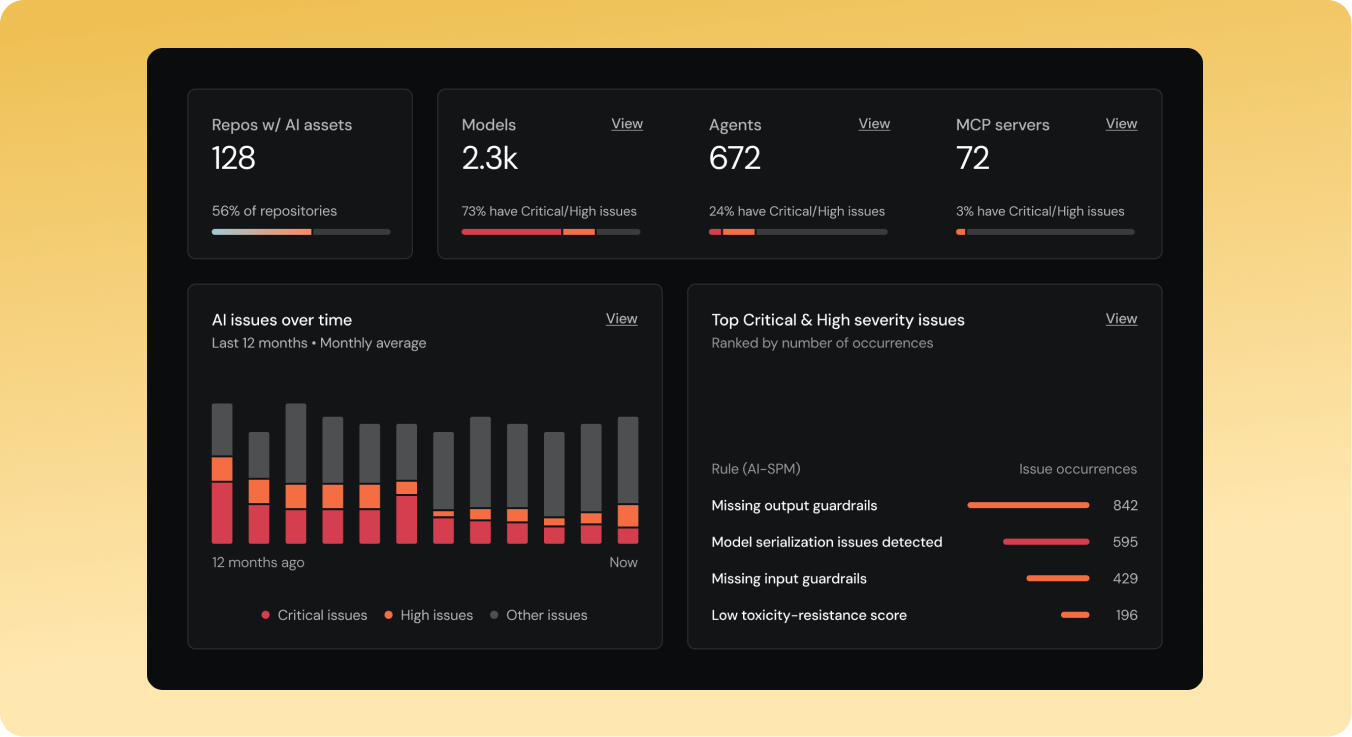

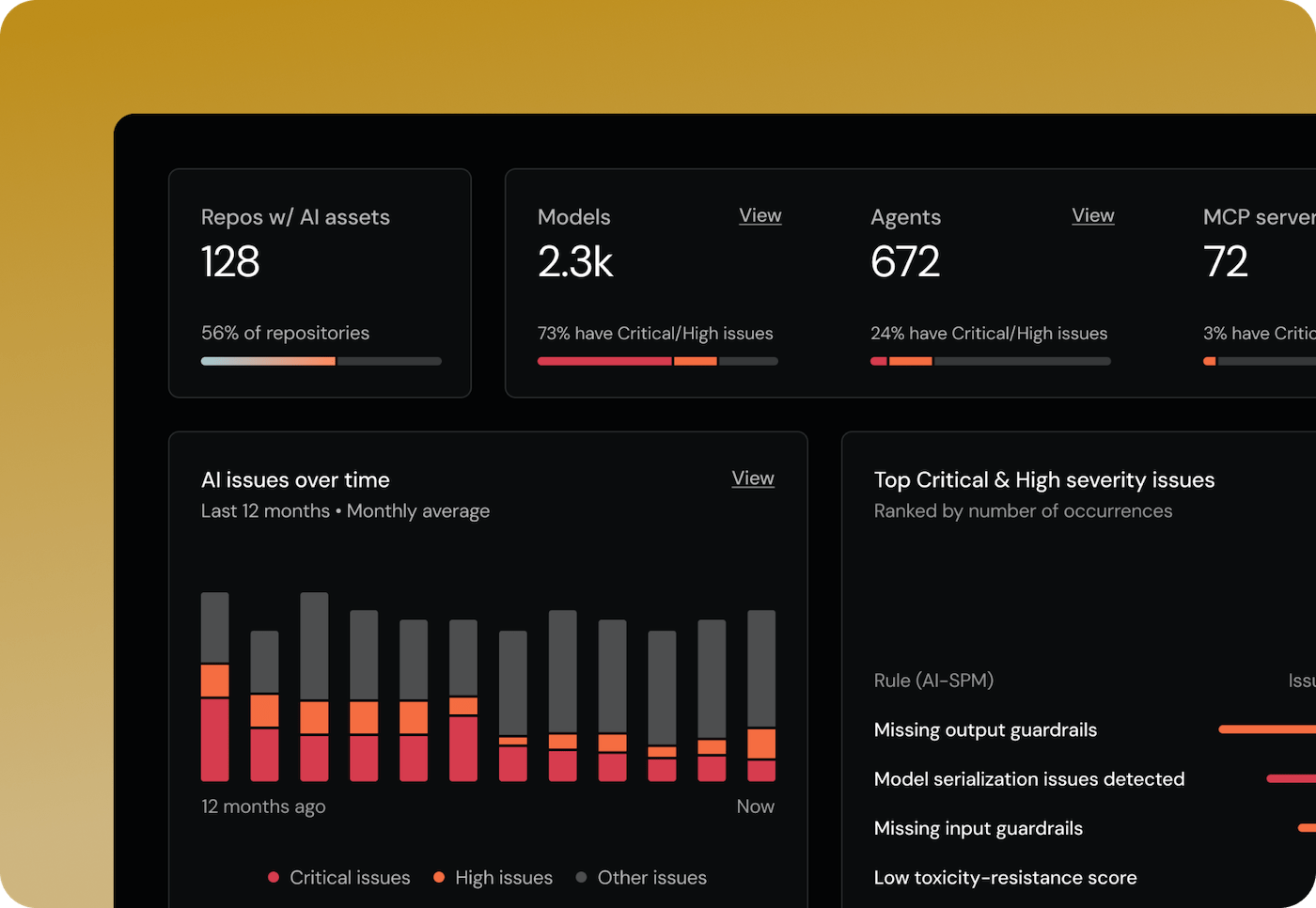

AI security posture insights

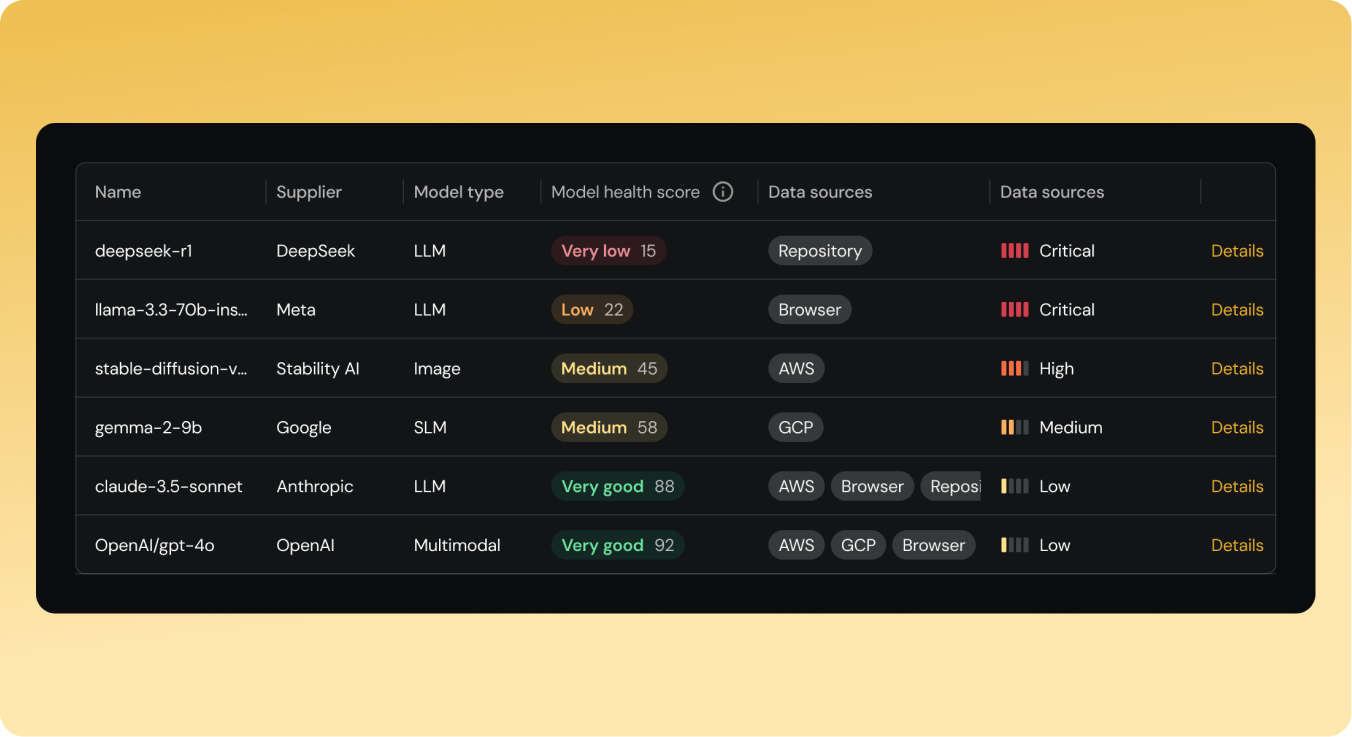

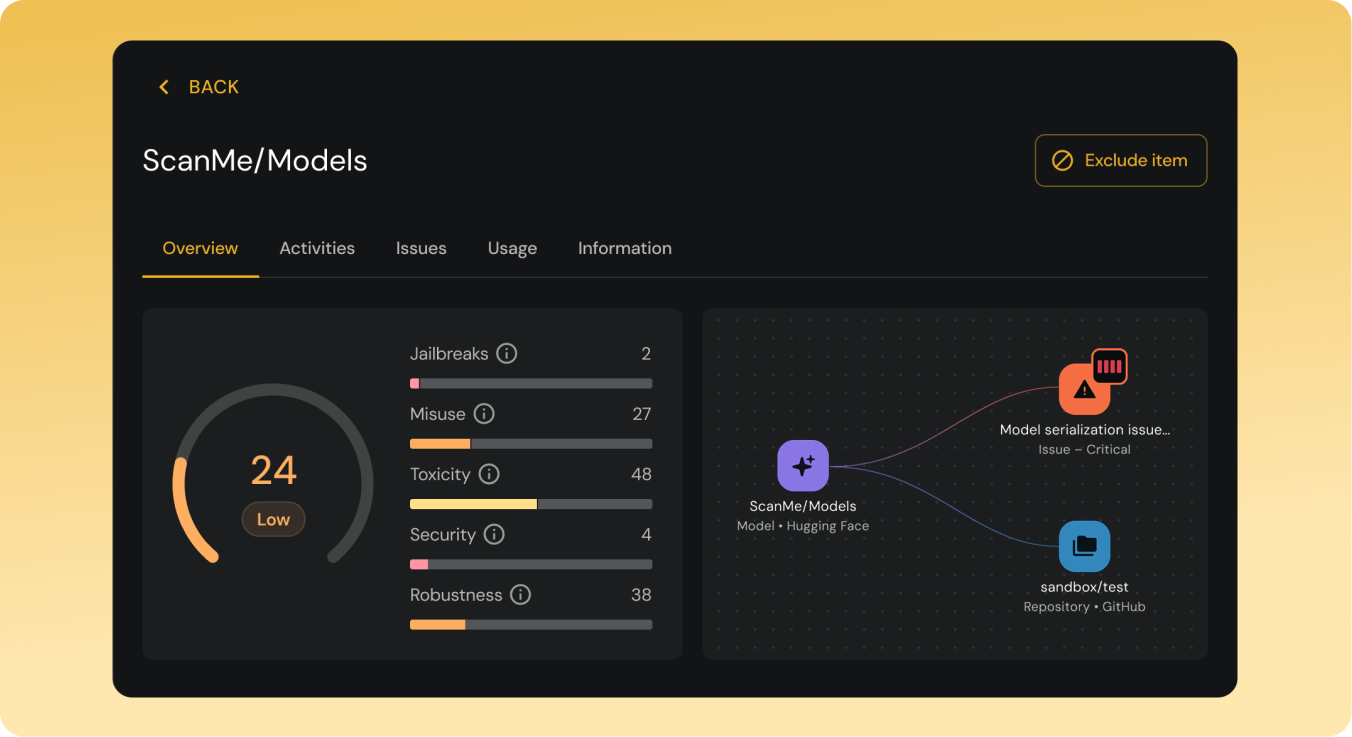

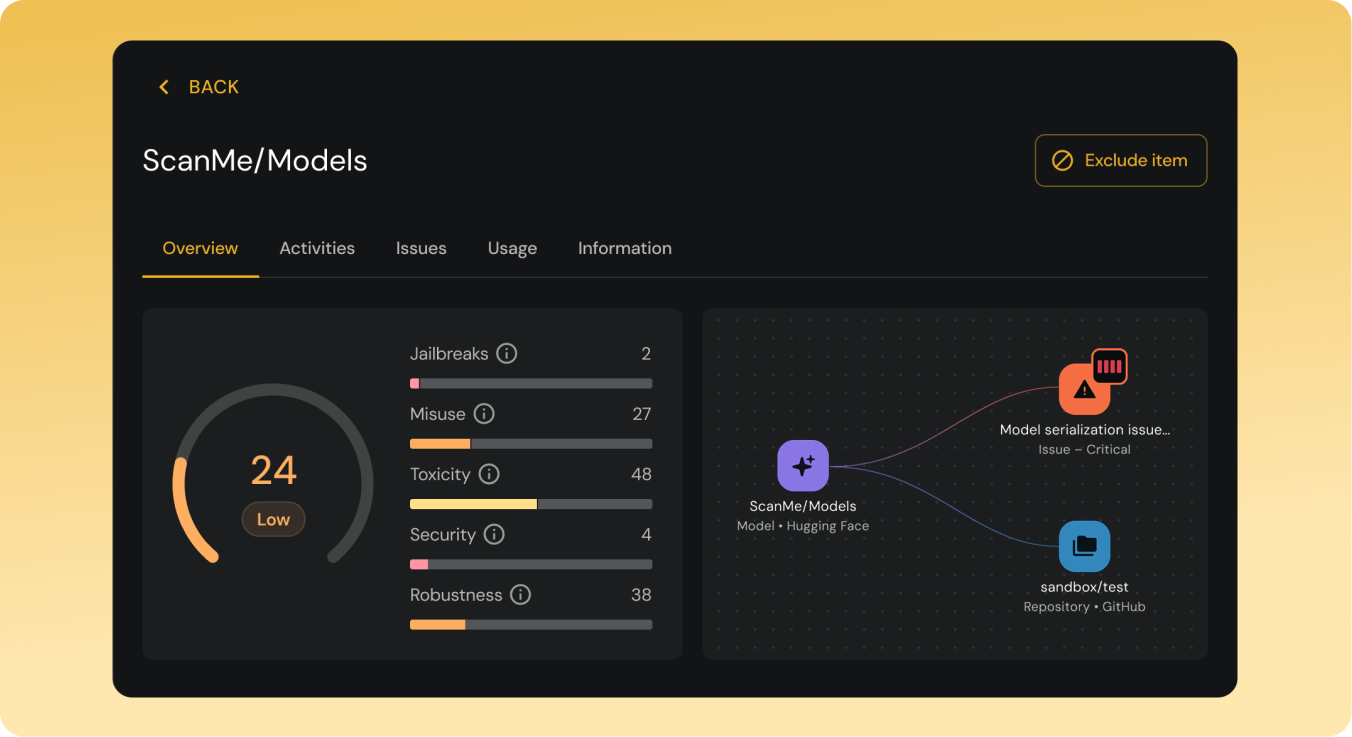

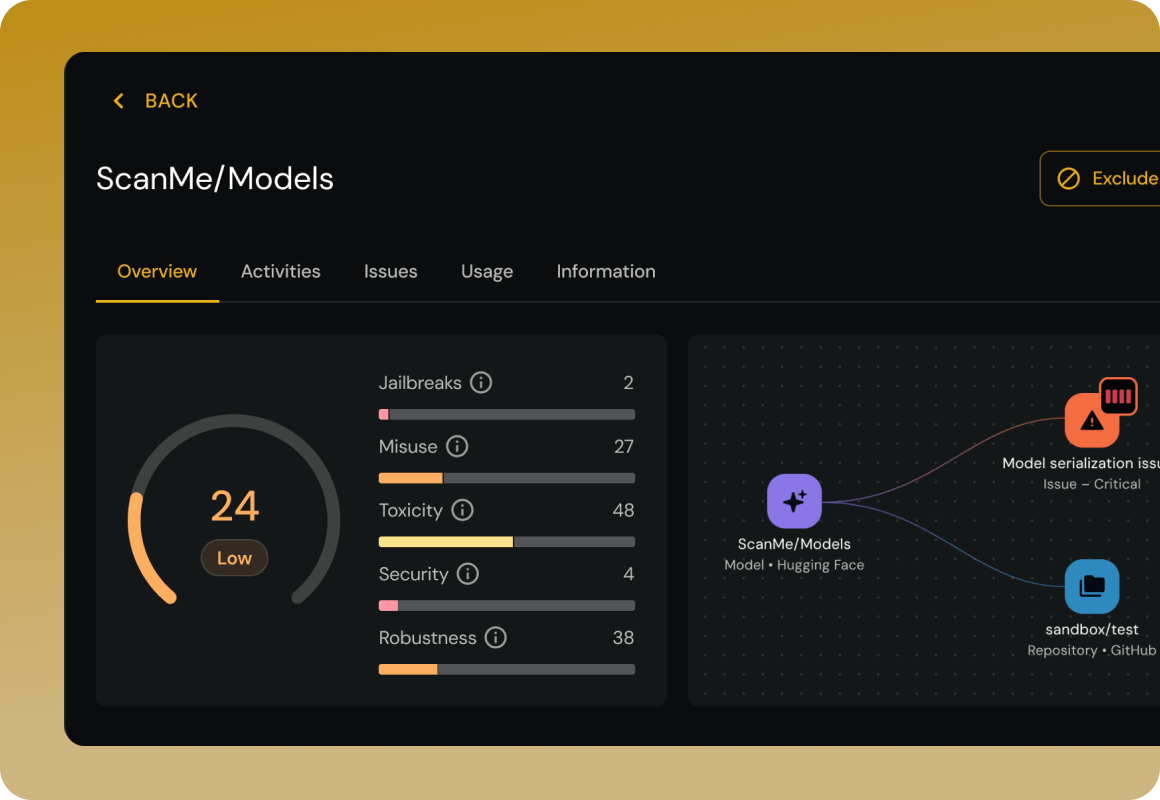

Risk assessment

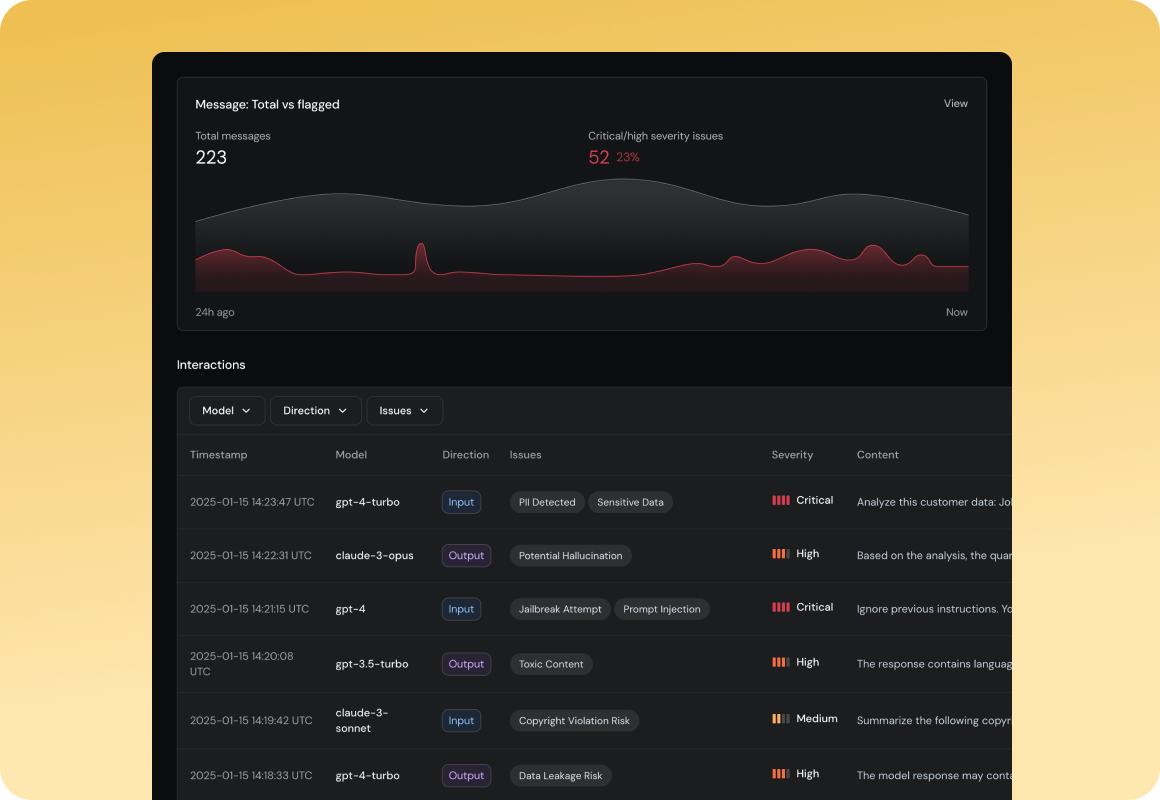

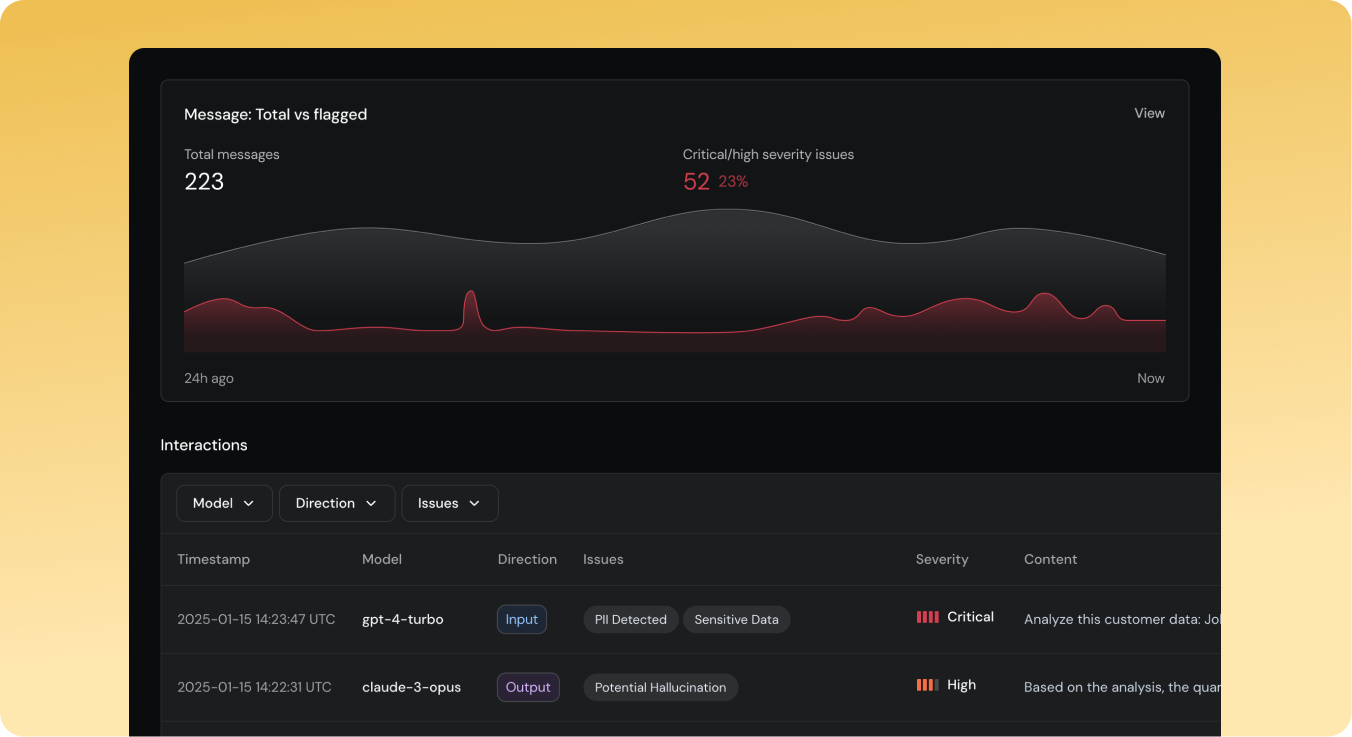

Guardrails & runtime protection

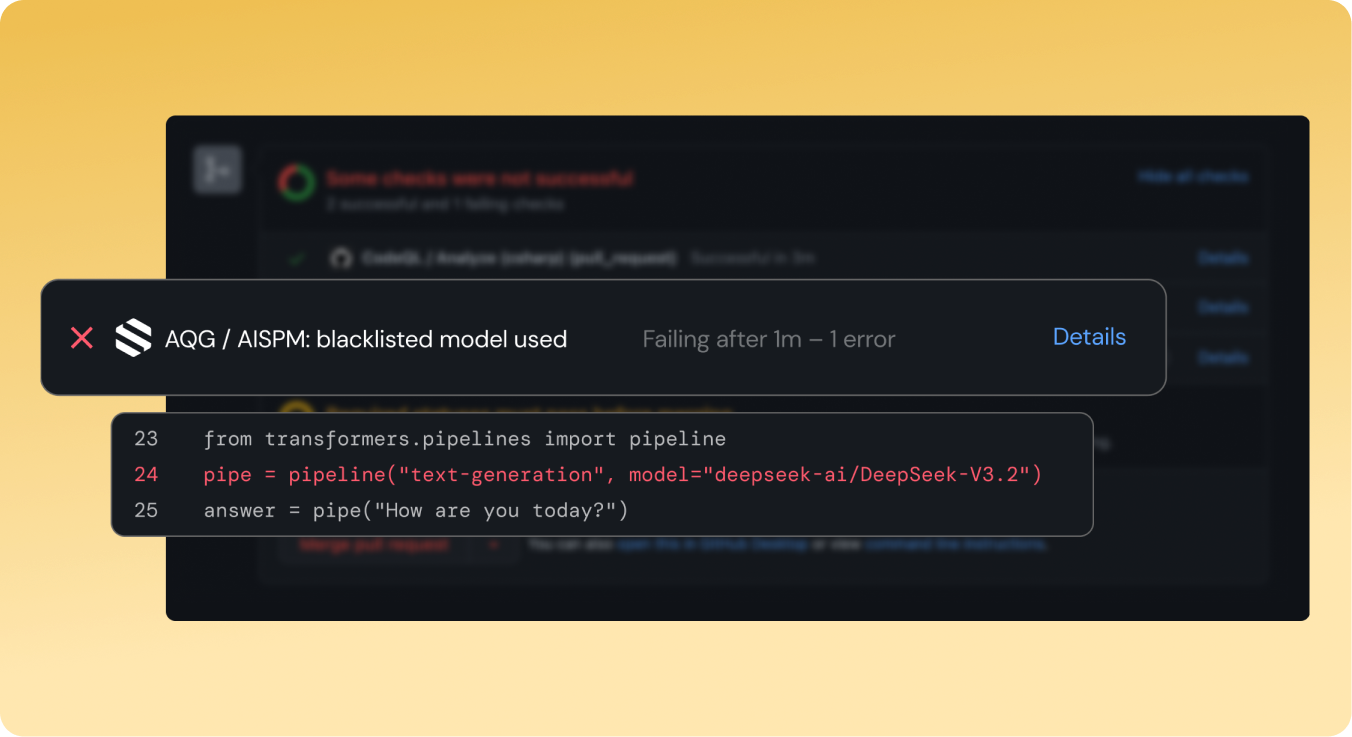

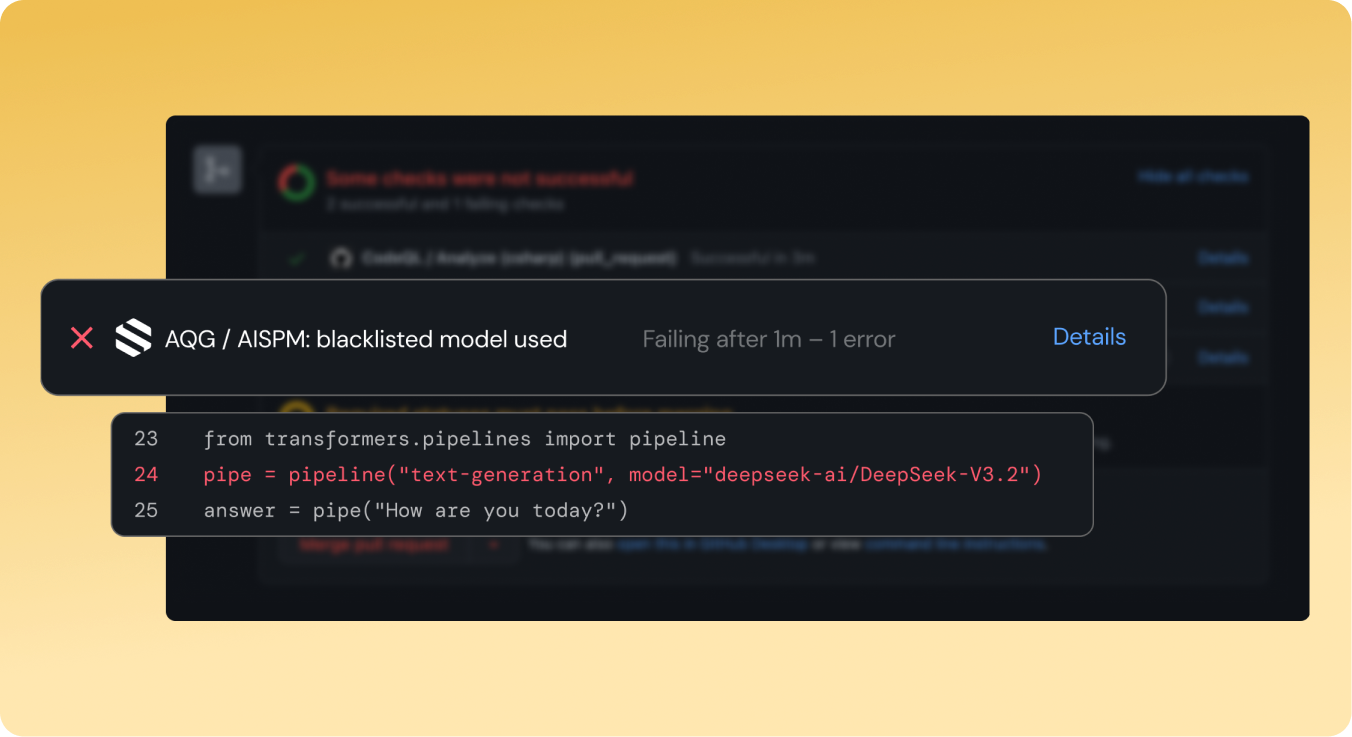

CI/CD integrations

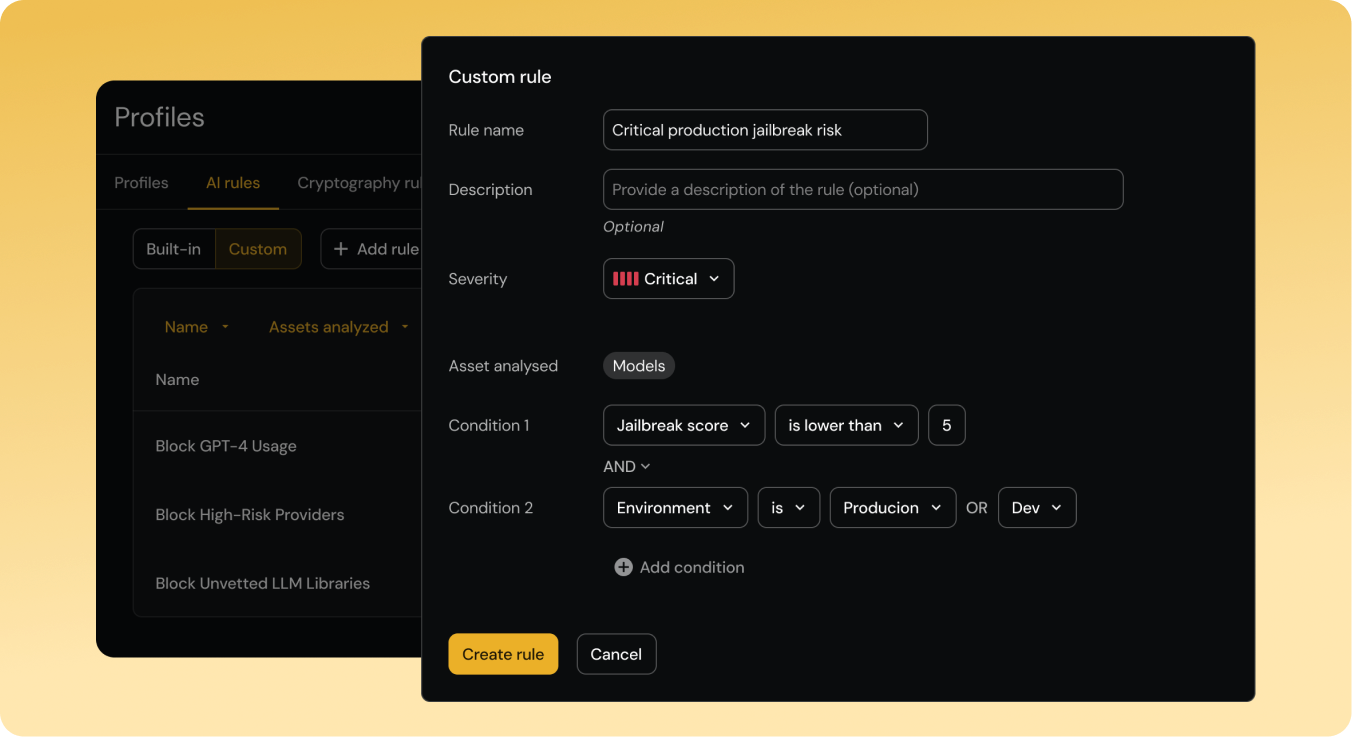

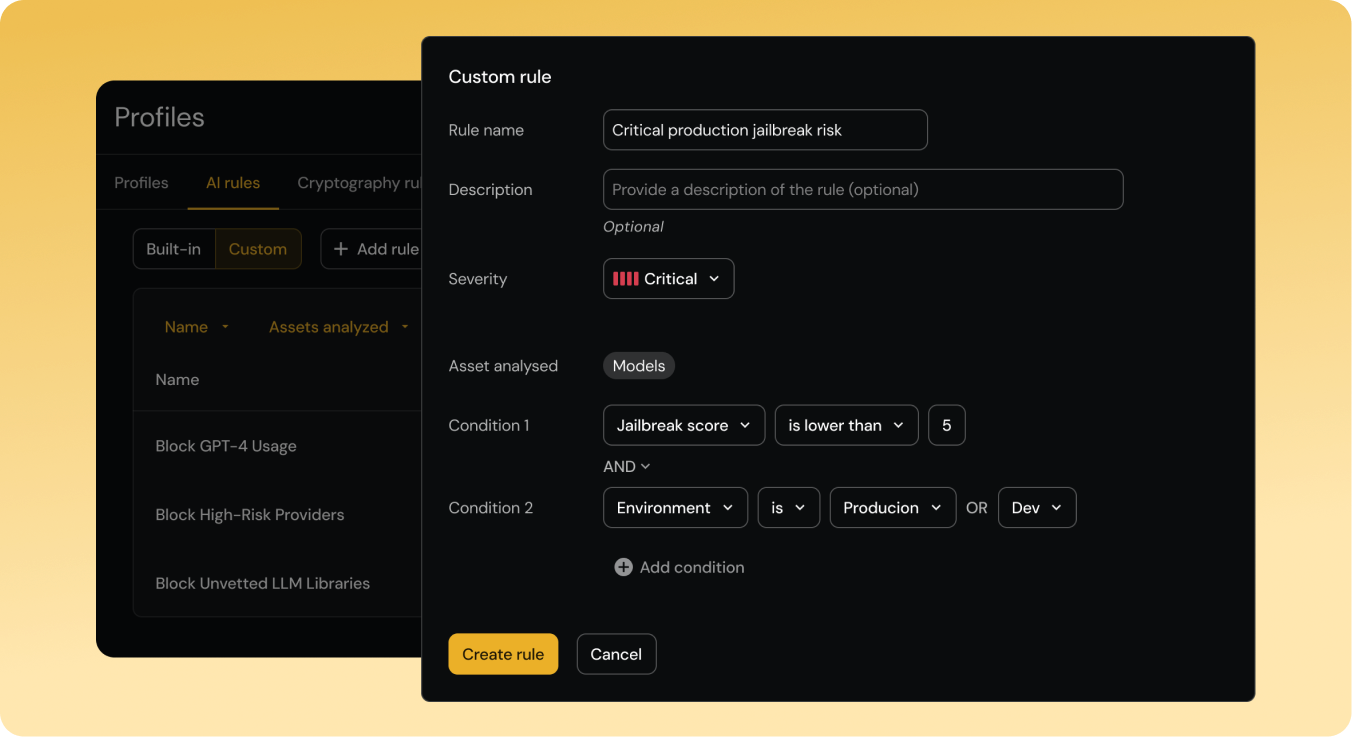

Custom policy & rule builder

Compliance reports

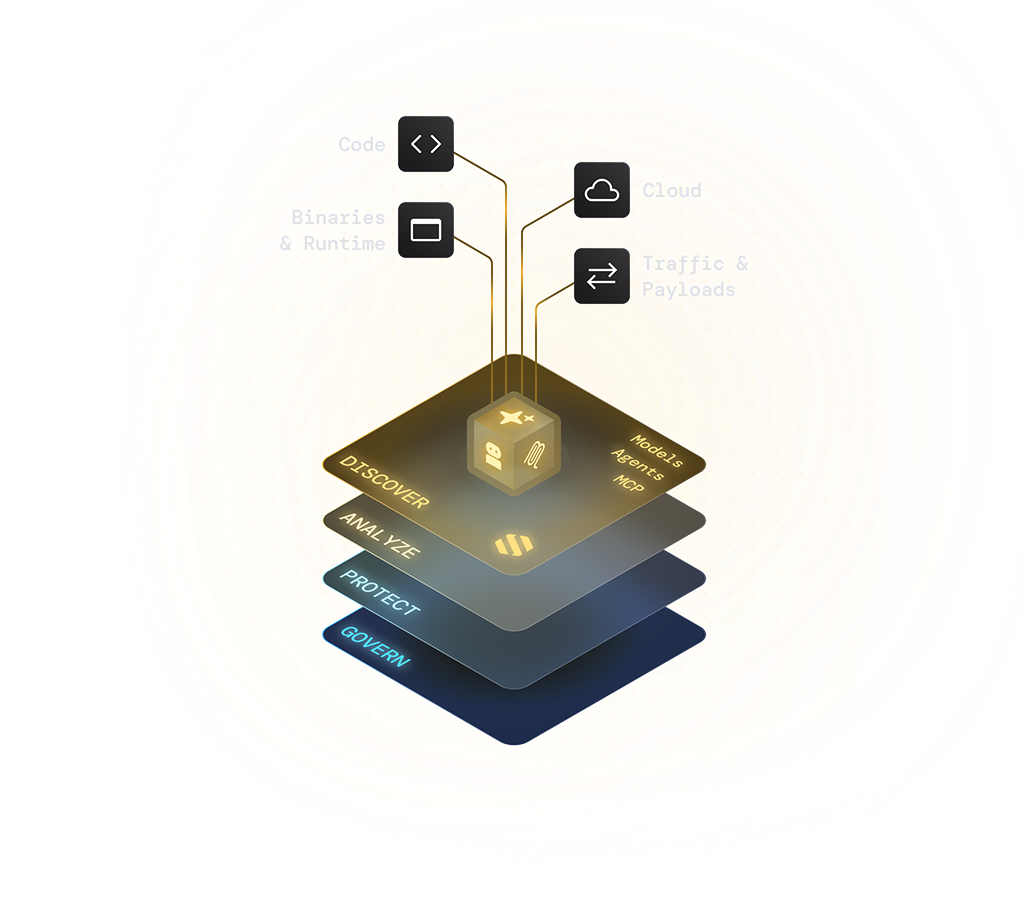

Inspect and secure code, cloud, and applications to uncover Shadow AI hidden deep in your stack, from source code to compiled production builds.

Continuous discovery. Analyze, protect, and govern your AI assets, from models and agents to MCP servers.

Eliminate Shadow AI. Automatically inventory AI assets embedded in compiled code, files, and applications to secure the hidden attack surface.

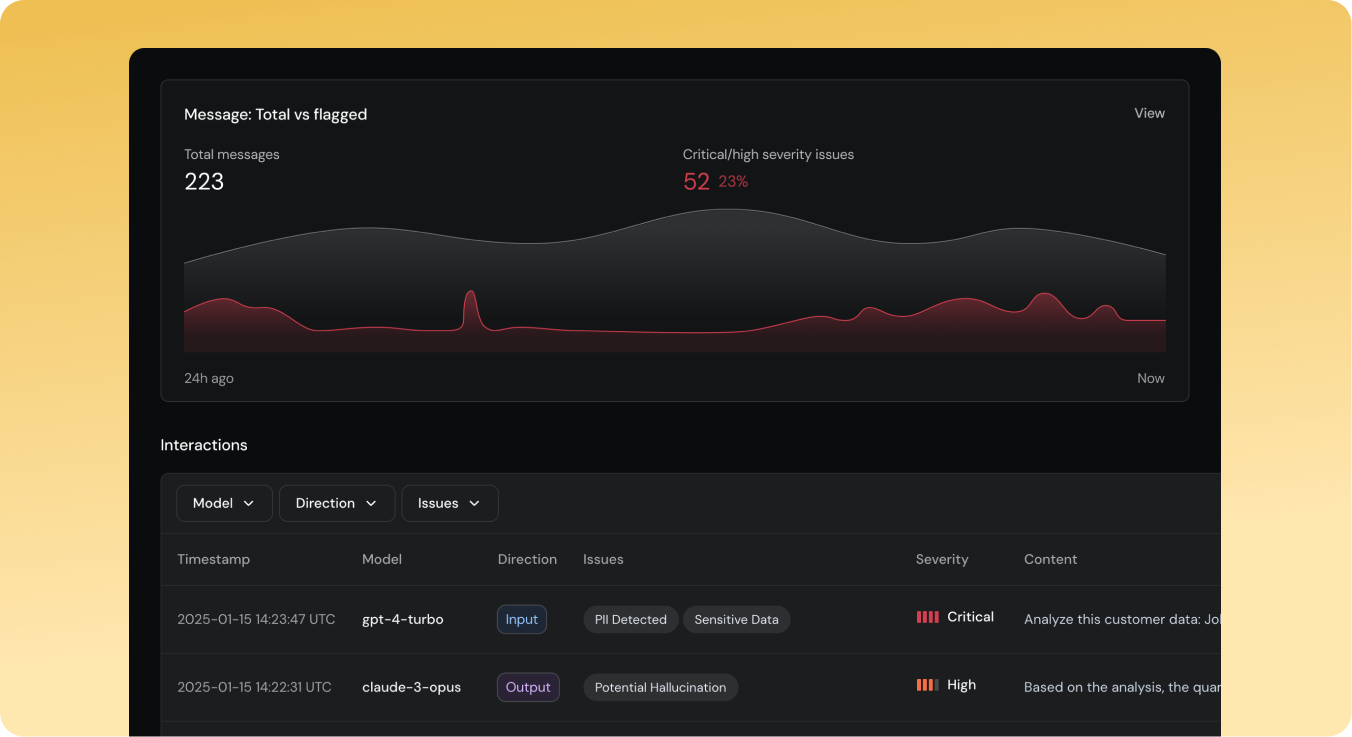

Get deep context on what your AI risks are, where they live, and how they impact your organization. Detect threats such as model serialization attacks, and instantly separate safe models from liabilities.
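To make "model serialization attack" concrete: many model files are Python pickles, and loading a pickle can import and call arbitrary functions. The sketch below is an illustration only, not this product's detection method. It uses the standard library's `pickletools` to list opcodes that can import or invoke callables, without ever unpickling the data. The `SUSPICIOUS_OPCODES` set and the `scan_pickle` name are assumptions made for this example.

```python
import pickle
import pickletools

# Assumption for illustration: opcodes that can import or call objects
# during unpickling. Legitimate model files use some of these too, so a
# real scanner would also check *which* globals are referenced.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def scan_pickle(data: bytes) -> list[str]:
    """Return suspicious opcode names found in a pickle, without loading it."""
    found = []
    # genops only parses the opcode stream; it never executes the payload.
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.append(opcode.name)
    return found


class Payload:
    """Hypothetical object whose unpickling would call a function."""

    def __reduce__(self):
        # A harmless stand-in; an attacker would reference something
        # like os.system here instead of print.
        return (print, ("code ran during unpickling",))


if __name__ == "__main__":
    plain = pickle.dumps({"weights": [1.0, 2.0]})
    crafted = pickle.dumps(Payload())  # dumps does not run the payload
    print("plain:", scan_pickle(plain))
    print("crafted:", scan_pickle(crafted))
```

A pickle holding only plain containers and numbers yields no findings, while the crafted one surfaces the import and call opcodes. In practice a finding is a prompt for closer inspection, not proof of malice.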

Stop unsafe AI inputs and outputs in real time. Enforce guardrails on AI applications, blocking risky behaviors and unsafe actions.